Automated web scraping is usually a simple task, especially when the page to be scraped is plain HTML with no JavaScript running in the background. But what do you do when the page contains dynamically rendered content, i.e. content that only appears once the page is interacted with? In the context of HTML, a dynamically rendered page is one whose content is activated or changed depending on input from the site user, e.g. timing or clicks. This input determines what content is delivered to the user, so some interaction is required before the content becomes available.

For a task like this, we cannot rely only on simple web scraping libraries like requests and BeautifulSoup, because they only see the HTML that the server returns when the page is first requested. Any content that JavaScript adds after the page has loaded is inaccessible to these libraries.
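To make the limitation concrete, here is a minimal sketch that uses only Python's standard-library html.parser as a stand-in for BeautifulSoup (an assumption made to keep the example free of dependencies). The sample page, the class name video-title and the helper static_titles are made up for illustration. A static parser sees the markup that was sent, but the title that the script would add in a real browser is never created, because the script is never executed.

```python
from html.parser import HTMLParser

# Hypothetical page: one title is in the markup, one is added by JavaScript.
SAMPLE_PAGE = """
<html><body>
  <div id="videos">
    <a class="video-title">Static video</a>
  </div>
  <script>
    var a = document.createElement("a");
    a.className = "video-title";
    a.textContent = "Dynamic video";
    document.getElementById("videos").appendChild(a);
  </script>
</body></html>
"""


class TitleCollector(HTMLParser):
    """Collects the text of every <a class="video-title"> element."""

    def __init__(self):
        super().__init__()
        self.in_title = False
        self.titles = []

    def handle_starttag(self, tag, attrs):
        if tag == "a" and ("class", "video-title") in attrs:
            self.in_title = True

    def handle_endtag(self, tag):
        if tag == "a":
            self.in_title = False

    def handle_data(self, data):
        # Script source is passed here as plain text, but in_title is False then.
        if self.in_title and data.strip():
            self.titles.append(data.strip())


def static_titles(html):
    """Return the video titles that are visible without running any JavaScript."""
    parser = TitleCollector()
    parser.feed(html)
    return parser.titles


print(static_titles(SAMPLE_PAGE))  # only the title present in the raw markup
```

Only "Static video" is found. A browser that runs the script would also show "Dynamic video", which is the gap a browser automation tool fills.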

Tasks like this require you to pull out the big guns.

Enter Selenium to the rescue.

Selenium is a browser automation tool used for running tests on a website on different types of browsers. With Selenium, we can automate navigating and interacting with a website, replicating how a human user would. This is how we are able to extract dynamically rendered content on a website.

Let’s see Selenium in action by using it to scrape the YouTube channel of one of YouTube’s biggest stars, Mr Beast. We will scrape the name of each video, the number of views and the day it was posted.

Project

Web scrape the video analytics from Mr Beast’s YouTube channel and save the output into a JSON file.

Solution

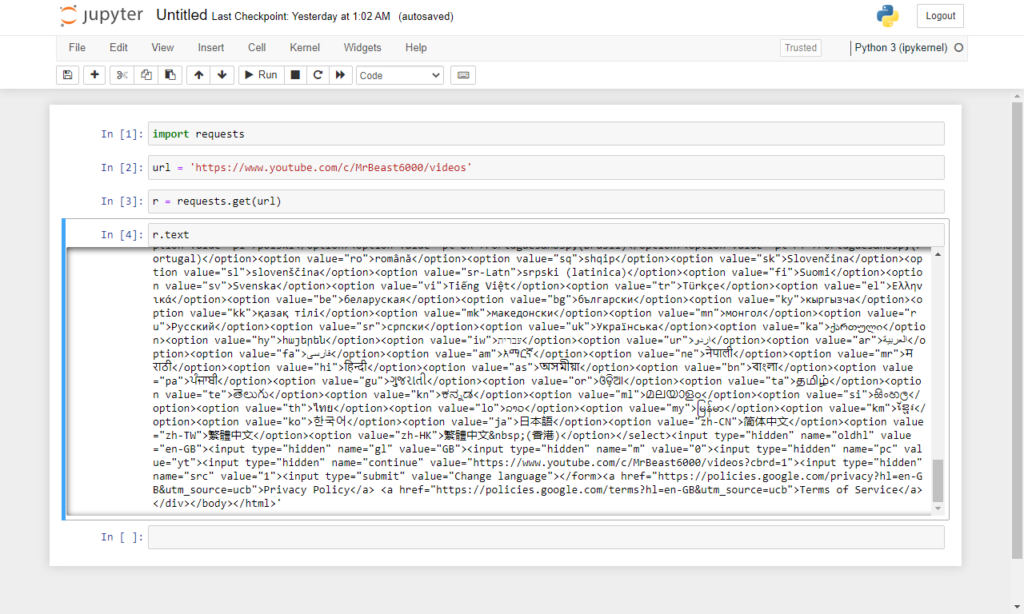

Before starting the project with Selenium, let’s take a look at the output when we try to parse the YouTube channel page with requests and BeautifulSoup.

The reason we are unable to access the data we need is that YouTube loads its content dynamically, and requests does not execute the JavaScript that fetches and renders that content.

On to Selenium.

After installing Selenium into our Python environment, we need to import webdriver and the Keys class from the Selenium package.

from selenium import webdriver
from selenium.webdriver.common.keys import Keys

The URL for Mr Beast’s YouTube channel is ‘https://www.youtube.com/c/MrBeast6000/videos’.

Like every web scraping project, the first task involves sending a request to the web page. To do this with Selenium, the webdriver will be used to send a GET request to the URL.

url = 'https://www.youtube.com/c/MrBeast6000/videos'
driver = webdriver.Chrome()
driver.get(url)

If the Chrome webdriver is installed correctly and we run the script at this point, an automated Chrome browser starts and opens our URL.

Looking at the automated Chrome browser, we notice that the URL has redirected to a ‘Before You Continue to YouTube’ page, which requires that we either accept all or reject all cookies. This is where Selenium is handy, because it can navigate and interact with the page like a real human.

The human behaviour that needs automating on this page is ‘wait for the page to load’ and ‘click on the REJECT ALL button’. To wait, we import the time library and call its sleep function with an argument of 5 seconds. To automate clicking on the REJECT ALL button, we have to search the page for the button, assign it to a variable and call the click method on that variable.

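We’ll find the button using the find_element_by_xpath method and name the variable reject_all. (The find_element_by_* helpers used in this tutorial were removed in Selenium 4.3; on newer versions the equivalent call is driver.find_element(By.XPATH, ...), with By imported from selenium.webdriver.common.by.)

import time

# Give the consent page time to load before looking for the button
time.sleep(5)
reject_all = driver.find_element_by_xpath('//*[@id="yDmH0d"]/c-wiz/div/div/div/div[2]/div[1]/div[3]/div[1]/form[1]/div/div/button')
reject_all.click()

Run the script again.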

Like programming magic, the REJECT ALL button is clicked and the page redirects to Mr Beast’s YouTube channel with the videos on his channel.
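The fixed time.sleep(5) used before the click either wastes time on a fast connection or is too short on a slow one. A common alternative is to poll until a condition holds; Selenium ships WebDriverWait in selenium.webdriver.support.ui for this purpose. The sketch below is a library-independent illustration of the same idea, not Selenium's implementation: wait_until and its parameters are hypothetical names.

```python
import time


def wait_until(condition, timeout=10.0, poll_interval=0.5):
    """Call condition() repeatedly until it returns a truthy value.

    Returns that value, or raises TimeoutError once `timeout` seconds
    have passed without success.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError("condition was not met in time")
        time.sleep(poll_interval)
```

With a driver in hand, the condition could be a small function that returns the list of matching buttons, which stays empty (and therefore falsy) until the button exists on the page.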

Notice that the channel currently shows only 30 videos. But Mr Beast did not amass over 16,054,105,696 views by posting only 30 videos. His other videos are not displayed yet because we need to scroll down (i.e. navigate) before YouTube dynamically renders, loads and displays the rest of the videos he has posted.

In other words, we need to automate the process of navigating down to the bottom of the browser.

This is slightly tricky and requires some deft manoeuvring.

To work around this, let’s use a while loop that compares the height of the page at the start of each iteration (call this variable height) with the height of the page after an automated scroll to the bottom (call this variable new_height). If the two values are the same, no new content was loaded and the loop ends; otherwise height is updated to new_height and the loop repeats.

time.sleep(5)
height = driver.execute_script("return document.documentElement.scrollHeight")

while True:
    time.sleep(1)
    # Press END to jump to the bottom of the page and trigger more loading
    driver.find_element_by_tag_name('body').send_keys(Keys.END)
    time.sleep(3)
    new_height = driver.execute_script("return document.documentElement.scrollHeight")
    if height == new_height:
        # The page did not grow, so everything has been loaded
        break
    else:
        height = new_height

Run the script again and now all the videos are loaded.
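The scroll-until-the-height-stops-changing idea can be tried without a browser. The sketch below is an illustration under stated assumptions: scroll_to_bottom, FakePage and the max_rounds safety limit are hypothetical names that do not appear in the script above, and the fake page simply pretends to load 30 more items per scroll.

```python
def scroll_to_bottom(get_height, scroll_down, max_rounds=1000):
    """Scroll until the page height stops growing; return the final height.

    max_rounds is an added guard against pages that never stop growing.
    """
    height = get_height()
    for _ in range(max_rounds):
        scroll_down()
        new_height = get_height()
        if new_height == height:
            break
        height = new_height
    return height


class FakePage:
    """Stand-in for a browser page that loads one more batch per scroll."""

    def __init__(self, total_items, item_height=100, batch=30):
        self.total_items = total_items
        self.item_height = item_height
        self.batch = batch
        self.loaded = min(batch, total_items)

    def height(self):
        return self.loaded * self.item_height

    def scroll(self):
        self.loaded = min(self.loaded + self.batch, self.total_items)


page = FakePage(total_items=100)
final_height = scroll_to_bottom(page.height, page.scroll)
print(page.loaded, final_height)
```

With Selenium, get_height could wrap the execute_script call and scroll_down could send Keys.END followed by a short sleep, as in the loop above.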

With all the videos loaded on the page, it’s on to the second task in web scraping, which is parsing the content. The Inspect Element tab shows that the details of each video thumbnail (name, views and date posted) can be found in a div tag with the class name ‘style-scope ytd-grid-video-renderer’. We’ll collect all web elements with that class name into a list, then use a for loop to extract the details we need from each element. For each video in the loop, the extracted values are stored as key-value pairs in a dictionary, which is then appended to a list.

videos = driver.find_elements_by_class_name('style-scope ytd-grid-video-renderer')

videos_analytics = list()

for video in videos:
    # The leading '.' keeps each XPath search inside the current video element
    video_title = video.find_element_by_xpath('.//*[@id="video-title"]').text.title()
    views = video.find_element_by_xpath('.//*[@id="metadata-line"]/span[1]').text
    day_posted = video.find_element_by_xpath('.//*[@id="metadata-line"]/span[2]').text
    link = video.find_element_by_xpath('.//*[@id="video-title"]').get_attribute('href')
    print(video_title, views, link, day_posted)

    video_analytics = {
        'video_title': video_title,
        'views': views,
        'day_posted': day_posted,
        'link': link,
    }
    videos_analytics.append(video_analytics)

The output is ready to go and it’s on to the last task of saving the output. The output can be saved as JSON using the dump function from Python’s json module. It wouldn’t hurt to also save the output in a CSV file using the pandas library.

import json
import pandas as pd

df = pd.DataFrame(videos_analytics)
df.to_csv('beast_video_analytics.csv')

with open('beast_video_analytics.json', 'w') as f:
    json.dump(videos_analytics, f, indent=2)
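If pandas is not available, the same two files can be written with the standard library alone. The sketch below is one way to do it, assuming every record has the same four keys as the dictionaries built in the loop. The helper save_records, the FIELDS list and the sample record are hypothetical and exist only to exercise the code.

```python
import csv
import json
import os
import tempfile

FIELDS = ["video_title", "views", "day_posted", "link"]


def save_records(records, csv_path, json_path):
    """Write a list of dictionaries to a CSV file and a JSON file."""
    with open(csv_path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        writer.writeheader()
        writer.writerows(records)
    with open(json_path, "w", encoding="utf-8") as f:
        json.dump(records, f, indent=2)


# Made-up record, used only to exercise the function.
sample = [
    {
        "video_title": "Example Video",
        "views": "1M views",
        "day_posted": "2 days ago",
        "link": "https://www.youtube.com/watch?v=example",
    }
]

out_dir = tempfile.mkdtemp()
csv_path = os.path.join(out_dir, "beast_video_analytics.csv")
json_path = os.path.join(out_dir, "beast_video_analytics.json")
save_records(sample, csv_path, json_path)

# Read both files back to confirm they hold the same data.
with open(json_path, encoding="utf-8") as f:
    from_json = json.load(f)
with open(csv_path, newline="", encoding="utf-8") as f:
    from_csv = list(csv.DictReader(f))
```

Unlike DataFrame.to_csv with its default settings, this version does not write an extra index column, so the CSV header matches the dictionary keys exactly.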
